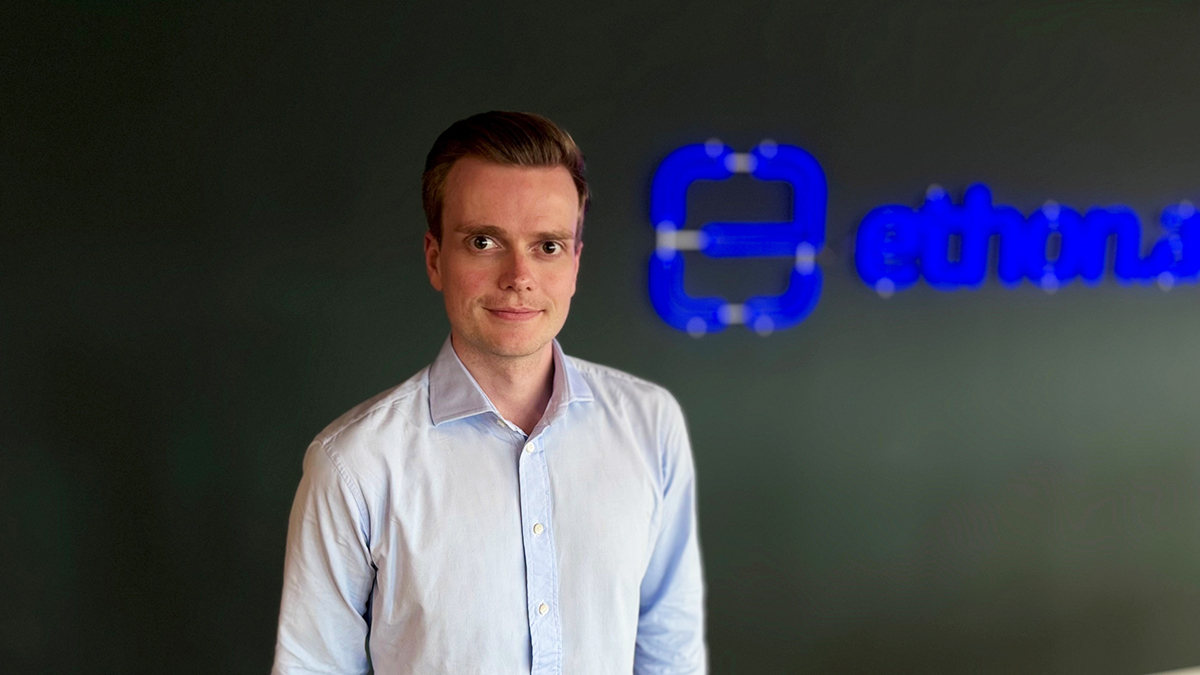

Written by Julian Senoner, Co-Founder and CEO of EthonAI

British industrialist Charles Babbage (1791-1871) once said, “Errors using inadequate data are much less than those using no data at all.” Three industrial revolutions later, it’s surprising how often decisions are still made on gut feeling without data. But it doesn’t have to be like that. The distinguishing factor of the ongoing fourth industrial revolution is the unparalleled access to data. However, having data is one thing; making good use of them is another. That’s where AI comes in.

Business and policymaking revolve around fact-based decision-making. Facts, as verified truths, emerge from data analysis and interpretation. The challenge lies in gathering and presenting data to transform them into actionable knowledge. Luckily, advancements in computer science and information technologies have significantly improved access to data for decision-makers. In addition, AI can provide data-driven insights previously unavailable to humans. However, AI isn’t a silver bullet. A special type of AI is needed.

With the recent rise of AI models, there have been impressive developments in both creative content generation and automation. Yet, when it comes to decision-making, AI still faces two critical challenges. First, AI models’ complexity and opacity can hinder trust and adoption. Many operate as “black boxes,” making it difficult for users to understand the basis of their suggestions. This lack of transparency leads to distrust among domain experts who cannot validate AI outputs against their expertise. Second, AI systems struggle with causal reasoning, a vital part of decision-making. While identifying correlations is useful, understanding the causality behind events is a game-changer.

The key to addressing AI’s two drawbacks is to design systems that are explainable and can be augmented with domain knowledge. A compelling illustration of this is our field experiment conducted with Siemens. In this experiment, factory workers engaged in a task of visually inspecting the quality of electronic products were divided into two groups: one aided by a “black-box” AI and the other by AI capable of providing explanations for its recommendations. The group using the explainable AI significantly outperformed those factory workers who got recommendations from the “black-box” AI. Interestingly, users with the explainable AI system understood better when to trust the AI and when to rely on their own domain expertise, thereby surpassing the AI system’s performance when used alone. Thus, when humans collaborate with AI, the outcomes are superior compared to letting the AI make decisions independently!

Explainability is not only helpful for creating trust among decision-makers. It can also be leveraged to get the best out of both human and AI strengths. AI can sift through large amounts of data, while humans provide a nuanced understanding of processes to discern cause and effect. For instance, consider our research in a semiconductor fabrication facility, where we provided process experts with explainable AI tools to identify the root causes of quality issues. Although the AI revealed complex correlations between various production factors and the quality of the outcomes, it was the experts who transformed these findings into practical measures. They used AI insights and their domain knowledge to devise experiments, verifying the causes of quality losses. The result? Quality losses plummeted by over 50%. This case emphasizes the indispensable role of human expertise in interpreting data and applying it within the context of established cause-and-effect relationships.

The key message is that data are proliferating, and AI is here to help, but without the human in the loop, don’t expect better decision-making. We need to build AI systems that expedite the human decision-maker’s access to facts. The recent developments in Explainable AI and Causal AI offer a promising path forward. Such tools allow users to understand AI systems’ inner workings and incorporate their own domain knowledge when evaluating AI output. They help explain the causal relations and patterns identified by AI from the data, ultimately empowering decision-makers to make better decisions.

Science, technology and innovation can be catalysts for achieving the sustainable development goals.

In the context of the UN Commission on Science and Technology for Development, the CSTD Dialogue brings together leaders and experts to examine how STI can serve these goals and to contribute to rigorous thinking on the opportunities and challenges of STI in several crucial areas, including gender equality, food security and poverty reduction.

The conversation continues at the annual session of the Commission on Science and Technology for Development and as an online exchange by thought leaders.